Next-Level AI

Some good headlines trigger fears, and so when Forbes recently ran the headline “Artificial Intelligence Is Already Causing Disruption and Job Losses at IBM and Chegg,” it caught our attention. The same article also says the World Economic Forum believes that AI will create 69 million new jobs. Either way, things are likely to change.

The following quote from Dr. Willms Buhse in this article (in German) sums it up: “Artificial intelligence will not replace you, but the one who uses AI better will replace you.”

Next-Level AI: ChatGPT, OpenAI, Azure OpenAI, and Microsoft 365 Copilot

Generative AI, such as ChatGPT, and technologies like OpenAI and Azure OpenAI will transform businesses and jobs. This article explores how machine learning and artificial intelligence generate and understand natural language, with applications such as writing assistance, code generation, drawing conclusions from data, and more.

Introducing ChatGPT

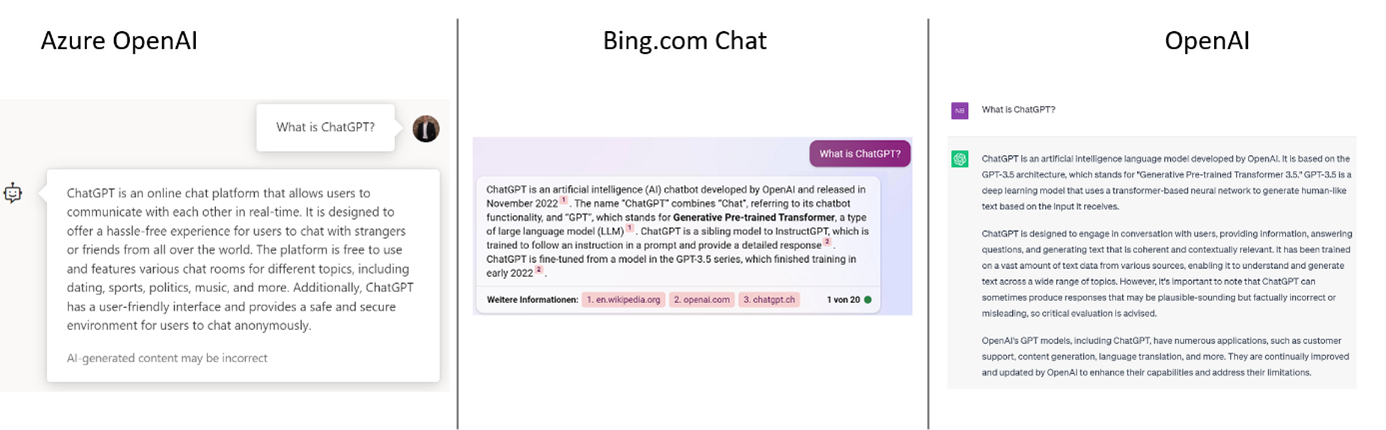

Azure OpenAI, Bing Chat, and OpenAI (not Microsoft) all use ChatGPT, but all answer “What is ChatGPT?” differently.

Azure OpenAI, Bing Chat, and OpenAI are all based on the same technology from the San Francisco-based company OpenAI. What is interesting and remarkable is that all three services provide differently worded and differently focused answers. One of the most important points in using these services is clear: the user is responsible for determining whether what the AI generates is accurate!

This is also what OpenAI itself tells you when you sign in for the first time: “While we have safeguards in place, the system may occasionally generate incorrect or misleading information and produce offensive or biased content. It is not intended to give advice.”

“It is not intended to give advice”: at this point, anyone who earns their money by advising others can take a breath. However, it is a very short breather, because finding answers to a customer's questions and passing those answers on is something that AI and services like ChatGPT can already do today. Soon they will do this better, faster, and more efficiently than humans. In the future, it will no longer be enough just to know something. The added value will be to assess and evaluate the knowledge, aggregate it with other information, and generate a benefit from it that the recipient can easily adopt.

Generative AI models like ChatGPT can only generate new content based on their training data. Therefore, they are not truly innovative or disruptive and do not have a deep understanding or knowledge of the world. On the contrary, they can generate implausible and incorrect information.

Basic Terms

This section introduces some basic terms of generative AI.

LLM

LLM stands for large language model; GPT-3 is one example. These models generate output based on patterns learned from their training data and on probability calculations. GPT-3, which OpenAI presented in 2020, was trained on the following data (the percentages give each data set's weight in the training mix):

- Common Crawl (weight: 60%) contains data from the public internet.

- WebText2 (weight: 22%) contains websites that were mentioned in Reddit posts. As a quality criterion, the URLs must have a Reddit score of at least 3.

- Books1 (weight: 8%) and Books2 (weight: 8%) are two data sets of books available on the internet.

- Wikipedia Corpus (weight: 3%) contains English Wikipedia pages on various topics.
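One way to read these weights is as sampling proportions: the higher the weight, the more often training examples are drawn from that data set. The following minimal sketch illustrates this reading. The function names are made up for illustration, and treating the percentages as sampling probabilities is an assumption of the sketch, not a description of OpenAI's actual training pipeline.

```python
import random

# Weights in percent, as listed above.
# Interpreting them as sampling probabilities is an assumption of this sketch.
TRAINING_MIX = {
    "Common Crawl": 60,
    "WebText2": 22,
    "Books1": 8,
    "Books2": 8,
    "Wikipedia": 3,
}


def normalized_weights(mix):
    """Scale the weights so that they sum to exactly 1.0."""
    total = sum(mix.values())
    return {name: weight / total for name, weight in mix.items()}


def sample_sources(mix, n, seed=0):
    """Draw n data-set names at random, proportionally to their weights."""
    rng = random.Random(seed)
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


if __name__ == "__main__":
    # The rounded percentages add up to 101, so they are normalized first.
    print(sum(TRAINING_MIX.values()))
    print(normalized_weights(TRAINING_MIX))
    draws = sample_sources(TRAINING_MIX, 10_000)
    for name in TRAINING_MIX:
        print(name, draws.count(name))
```

Because the listed percentages are rounded, they sum to 101 rather than 100, which is why the sketch normalizes them before treating them as probabilities.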

To ensure that the models deliver useful results right from the start, they are pre-trained. This is also where the name GPT comes from: Generative Pre-trained Transformer. The models are trained via deep learning. Training starts from random parameter values, which the model uses to compute an output. This computed output is then compared to a reference output that, ideally, humans have verified. The deviation from the reference output is fed back into the model as a correction to its parameters. Repeating this over many examples makes it likely that the model produces the correct output when the same input is used again.
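The training principle described above can be sketched with a toy model that has a single parameter. This is not how GPT models are implemented; real models have billions of parameters and use far more elaborate architectures. The function name `train`, the learning rate, and the example data are assumptions chosen for illustration.

```python
import random


def train(examples, steps=200, learning_rate=0.05, seed=0):
    """Fit y = w * x by repeatedly correcting a randomly initialized weight."""
    rng = random.Random(seed)
    w = rng.uniform(-1.0, 1.0)  # start from a random value
    for _ in range(steps):
        for x, target in examples:
            output = w * x                  # compute an output
            error = output - target         # compare it with the verified output
            w -= learning_rate * error * x  # feed the error back as a correction
    return w


if __name__ == "__main__":
    # Verified input/output pairs that follow the hidden rule y = 3 * x.
    data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
    w = train(data)
    print("learned weight:", round(w, 3))
    print("prediction for x = 2:", round(w * 2.0, 3))
```

After training, the same input leads to (almost exactly) the verified output, which is the behavior the paragraph above describes.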

Prompt

A prompt is usually the user input that is then sent to the model to generate responses. In general, there are three roles when interacting with a model such as GPT-3:

- System: The System role welcomes and sets the context. Example: “Hello, I am your bot. How can I help?”

- User: The User role involves a user interacting with the system. Example: The user asks the question “Who founded Microsoft?” using the Bing Chat feature.

- Assistant: The Assistant role is the AI that provides answers. Example: The generated answer to the question is: “Microsoft was founded by Bill Gates and Paul Allen.”
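Chat-style APIs, such as the OpenAI Chat Completions API, typically represent these three roles as a list of messages, each with a `role` and a `content` field. The sketch below only builds such a list and does not call any service. The helper `message` and the wording of the system message are assumptions made for illustration.

```python
ALLOWED_ROLES = ("system", "user", "assistant")


def message(role, content):
    """Create one role-tagged message; reject roles other than the three above."""
    if role not in ALLOWED_ROLES:
        raise ValueError(f"unknown role: {role!r}")
    return {"role": role, "content": content}


# A short conversation using the examples from the list above.
# The system text is illustrative; it sets the context for the whole chat.
conversation = [
    message("system", "You are a helpful bot that answers questions briefly."),
    message("user", "Who founded Microsoft?"),
    message("assistant", "Microsoft was founded by Bill Gates and Paul Allen."),
]

if __name__ == "__main__":
    for entry in conversation:
        print(f"{entry['role']}: {entry['content']}")
```

To continue the chat, an application would append the next user message to this list and send the whole list to the model again, so that the model sees the earlier turns as context.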

The user enters the prompt in whatever application is being used, and the application passes it on to the model. As with a database query, the quality of the prompt determines the quality of the result. A bad prompt is formulated too generally or contains too much specialized terminology. Here are a couple of examples:

- A very general prompt: “Please summarize the following article.”

- Better: “Please summarize the following article in 5 bullet points and list the sources quoted in the article.”

You can also tell the model which context it should assume. The examples at Awesome ChatGPT Prompts show how this works. You can tell the model specifically what you want it to do. For example: “Act as a tour guide and tell me what I should see in Rome.”

Guiding the model in this way, by giving it a role, context, or additional information to draw on, is often called grounding. The model is guided to answer certain questions, to take up different aspects, or to include other factors.
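The difference between a general and a specific prompt can be made concrete with a small helper that assembles a prompt from a role, optional context, a task, and explicit constraints. This is a minimal sketch; the function `build_prompt` and its parameters are hypothetical and not part of any library.

```python
def build_prompt(task, role=None, context=None, constraints=()):
    """Assemble a prompt from an optional role, optional context, a task, and constraints."""
    parts = []
    if role:
        parts.append(f"Act as {role}.")
    if context:
        parts.append(f"Use the following information:\n{context}")
    parts.append(task)
    parts.extend(constraints)
    return "\n".join(parts)


if __name__ == "__main__":
    # The very general prompt from the example above.
    print(build_prompt("Please summarize the following article."))
    print()
    # The more specific version with explicit constraints.
    print(build_prompt(
        "Please summarize the following article.",
        constraints=[
            "Use 5 bullet points.",
            "List the sources quoted in the article.",
        ],
    ))
    print()
    # A prompt that assigns a role.
    print(build_prompt("Tell me what I should see in Rome.", role="a tour guide"))
```

Keeping role, context, and constraints as separate inputs makes it easier to see which part of a prompt is responsible when the output is not what was expected.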

GPT-3, GPT-4, and Other Models

GPT-4 is the most up-to-date version of OpenAI's GPT language model. Officially launched on March 14, 2023, it contains significantly more and newer data than the GPT-3.5-turbo version. Most importantly, it has new capabilities. It understands not only text, but also visual information and can recognize and interpret details in images.

This ability to understand visual information makes GPT-4 interesting for tasks such as image recognition, image labeling, and visual question answering. GPT-4 accepts images as input but responds in text; generating images is the job of separate models such as DALL-E 2. By combining text and image understanding, GPT-4 is useful for industries such as marketing, design, and e-commerce. In this article, OpenAI says of its new model: “We spent 6 months making GPT-4 safer and more aligned. GPT-4 is 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses than GPT-3.5 on our internal evaluations.”

The fact that LLMs can handle formats other than text or speech isn't new. The challenge is to make the content of a video or MP3 file accessible to the model. Examples of this are:

- ChatGPT for YouTube

- chatpdf.com

- Visual ChatGPT

The GPT model is certainly the most popular one currently, but it is neither the first nor the only one. The GitHub page https://github.com/Hannibal046/Awesome-LLM shows which models are available. Depending on the use case, specialized models exist. Examples include the “Codex” model, which was trained on software code, and the “Galactica” model, a language model for scientific scenarios. DALL-E 2, also from OpenAI, is a model that creates realistic images and artwork based on a description in natural language. The app https://ki-nderbuch.de/, for example, takes advantage of this. After the user fills in some rudimentary information, the app generates a story, including a drawing of the story’s context.

The Partnership Between OpenAI and Microsoft

Microsoft and OpenAI have a long-term partnership that began in 2016 and expanded in 2019 with a $1 billion investment from Microsoft in OpenAI. The partnership aims to advance AI research and development. In return, Microsoft embedded the LLMs that were developed and trained by OpenAI into its Azure environment as a service and offers them to customers as Azure OpenAI.

The main difference between the two providers is that Azure OpenAI leverages Microsoft Azure security features, such as virtual networking, private endpoints and content filtering. Microsoft is co-developing the APIs with OpenAI to ensure compatibility and smooth integration of solutions into both services.

OpenAI and Azure OpenAI differ mainly in the following ways:

- Security: Azure OpenAI provides more security features, and data sent to Azure OpenAI stays within Microsoft Azure. Data is not shared with OpenAI (the company).

- Regional availability: Azure OpenAI is available in three regions: East US, South Central US, and West Europe. OpenAI currently offers only one global region.

- Access: Azure OpenAI requires that customers have clearly defined use cases that align with Microsoft's Principles for Responsible AI Use. OpenAI has a similar process, but with different criteria.

The choice between OpenAI and Azure OpenAI depends on your requirements and preferences. If security and regional availability are a high priority, Azure OpenAI is the better option. If more flexibility and convenience in using the language models are needed, OpenAI is recommended.
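From a developer's point of view, the two services are called in a very similar way. The sketch below builds (but does not send) a chat request for each service using only the Python standard library. It assumes the publicly documented REST conventions: OpenAI uses a single global endpoint with a bearer token, while Azure OpenAI uses a per-resource endpoint, a deployment name in the URL, an `api-version` query parameter, and an `api-key` header. The resource name, deployment name, key, and API version in the usage example are placeholders, not values from this article.

```python
import json
import urllib.request


def openai_request(api_key, model, messages):
    """Build a chat request for the global OpenAI endpoint (not sent)."""
    url = "https://api.openai.com/v1/chat/completions"
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def azure_openai_request(api_key, resource, deployment, api_version, messages):
    """Build a chat request for an Azure OpenAI resource (not sent)."""
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = json.dumps({"messages": messages}).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


if __name__ == "__main__":
    chat = [{"role": "user", "content": "Who founded Microsoft?"}]
    # All names and keys below are placeholders.
    first = openai_request("YOUR-OPENAI-KEY", "gpt-3.5-turbo", chat)
    second = azure_openai_request(
        "YOUR-AZURE-KEY", "my-resource", "my-deployment", "2023-05-15", chat
    )
    print(first.full_url)
    print(second.full_url)
```

The message payload is the same in both cases. What differs is where the request goes and how it is authenticated, which matches the security and regional differences listed above.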

Microsoft 365 Copilot

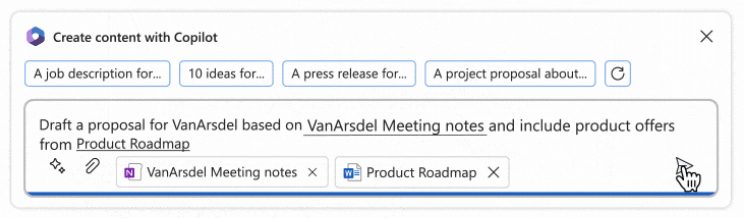

Microsoft 365 Copilot is the latest spin-off of services built on AI. Copilot will be integrated into Microsoft 365 including apps such as Word, Teams, Outlook, PowerPoint, and Excel. On the backend, the service combines data from Microsoft Graph and features from the GPT-4 model. Copilot supports daily work based on data coming from a Microsoft 365 tenant with the same features as ChatGPT.

Example of Microsoft 365 Copilot in Word

Used with permission from Microsoft.

One of the most frequent questions about Copilot and the Azure OpenAI service is whether the models are trained with customers’ data. Microsoft's statement is clear: neither your document content nor the descriptions you write are used for machine learning. For this and answers to other questions, see Frequently asked questions: AI, Microsoft 365 Copilot, and Microsoft Designer.

What Is the Difference Between Microsoft 365 Copilot and Azure OpenAI?

Microsoft 365 Copilot is an enterprise-ready app that leverages Azure OpenAI and Microsoft Graph. After getting a license for it, users can access it directly. Azure OpenAI, on the other hand, is a backend service for developing custom apps, using or customizing various LLMs, and thus creating a solution for very specific requirements and processes.

Technology Status: What Is Available? What Is Announced?

Anyone interested can easily apply for access to the preview program for Azure OpenAI. Generally, users get access after a few days. The latest language model in this preview is GPT-3.5-turbo. There is also a preview for GPT-4. However, access to the GPT-4 preview is currently only possible for selected customers.

At Inspire 2023, Microsoft announced which license packages Copilot will be available with. Customers with Microsoft 365 E3, Microsoft 365 E5, Microsoft 365 Business Standard, or Microsoft 365 Business Premium can purchase Copilot for $30 per user per month. Microsoft also introduced Microsoft Sales Copilot, a version of Copilot that is designed for sales and personalizes customer interactions. The third announcement was Bing Chat Enterprise, which lets companies use the generative AI features of Bing Chat in a way that takes data protection into account and is therefore compliant.

Furthermore, Microsoft introduced the semantic index for Copilot. This index provides suitable answers even if the input in the search box is imprecise or very brief. The semantic index for Copilot requires at least a Microsoft 365 E3 or Microsoft 365 E5 license. In the same article, Microsoft also mentions the planned integrations in Whiteboard, PowerPoint, Outlook, OneNote, Loop, and Viva. In addition, there are versions for SharePoint and the announced Microsoft Security Copilot, which is intended to help mitigate IT security incidents.

Legal Aspects Related to ChatGPT and Other AI-Generated Responses

The following sections summarize key legal-related considerations for implementing AI solutions in a business context. These considerations are discussed in this newsletter (in German) from the law firm Luther.

ChatGPT and Data Protection

If information in the prompts to ChatGPT directly or indirectly identifies a person, ChatGPT processes personal data. This use of ChatGPT becomes problematic under data protection law if either of the following occurs:

- Companies integrate it into their website.

- Companies build their own applications based on the language model technology and offer those applications to their customers.

In these cases, corresponding agreements under data protection law, such as a data processing agreement (German: Auftragsverarbeitungsvertrag, AVV), must be concluded. However, concluding an AVV alone is insufficient. Because all ChatGPT data is transferred to servers in the USA, the EU standard contractual clauses must also apply. Companies should check whether additional safeguards are described. They must also conduct a transfer impact assessment for the data transfer to the USA, in accordance with the requirements of the EU standard contractual clauses.

Guidelines for Employees and the Protection of Trade Secrets

Employees should know the risks and be instructed via a policy not to submit prompts to ChatGPT that contain sensitive data of any of their customers, suppliers, business partners, or work colleagues. It’s also necessary to monitor how data protection regulators will comment on generative AI language models such as ChatGPT.

In addition, the use of ChatGPT may affect the protection of trade and business secrets. It cannot be ruled out that users will be tempted to mention trade and business secrets in their prompts, for instance to increase the quality of the output. A user might mention a trade secret to get ideas for further products or new services. However, depending on the characteristics of the AI service, ChatGPT may use prompts and output to develop or improve its services. Companies should therefore advise their employees not to disclose any business-critical information in a prompt to ChatGPT. Otherwise, this information could end up in the hands of competing companies and undermine the company's own trade secret protection scheme.
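A written policy can be complemented by a simple technical check that runs before a prompt leaves the company. The following sketch masks e-mail addresses and flags prompts that contain terms from a company-defined block list. It is an illustration only: the pattern catches just one obvious kind of personal data, the function names and the block list are hypothetical, and such a filter replaces neither the policy nor a legal review.

```python
import re

# Deliberately simple pattern for e-mail addresses; it will miss unusual formats.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(prompt, placeholder="[REDACTED EMAIL]"):
    """Replace every e-mail address in the prompt with a placeholder."""
    return EMAIL_PATTERN.sub(placeholder, prompt)


def contains_blocked_term(prompt, blocked_terms):
    """Return True if the prompt mentions any term from the block list."""
    lowered = prompt.lower()
    return any(term.lower() in lowered for term in blocked_terms)


if __name__ == "__main__":
    # Hypothetical block list, for example internal project code names.
    blocked = ["Project Falcon"]
    prompt = "Draft a reply to anna.schmidt@example.com about Project Falcon."
    if contains_blocked_term(prompt, blocked):
        print("Prompt blocked: it mentions a confidential term.")
    print(redact_emails(prompt))
```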

ChatGPT and Copyright

Copyright-protected works require an author’s personal intellectual creation. Such a personal intellectual creation can only be based on a human achievement. This follows from Section 2 (2) of the German Copyright Act. Results created by AI-based applications such as ChatGPT are therefore, in principle, not protected by copyright. ChatGPT cannot be the author of the output generated by the AI language model. Accordingly, ChatGPT cannot grant users any (commercial) rights of use and exploitation.

AI Regulation Is Coming

Since April 2021, there has been a draft EU regulation to harmonize the rules on AI. Among other things, the AI regulation defines in an annex what AI is and which risk classifications apply. Certain use cases are to be prohibited. For other scenarios, there will be extensive transparency, risk management, and documentation requirements.

Conclusion

Generative AI, such as ChatGPT and the other techniques described here, will change many things. ChatGPT can serve as a chatbot that interacts with humans in a natural way. This will enhance or complement areas such as customer service, education, entertainment, and other services. Models like DALL-E can create original and realistic images on demand that have never been seen before. This will revolutionize and inspire art, design, marketing, and other creative industries. Finally, this technology will transform businesses and jobs. As quoted in the beginning, “Artificial intelligence will not replace you, but the one who uses AI better will replace you.”

All of this creates both opportunities and responsibilities.