Power BI Implementation Models for Enterprises – Part 3 (Cloud-Friendly Clients)

This is article three in a series describing how I implement Power BI systems in enterprises. In my first article, I described how we categorize the clients that we work for. We allocated clients to one of the following categories:

- Cloud-native

- Cloud-friendly

- Cloud-conservative

- Cloud-unfriendly

In this article, I’ll describe how we work with the second group: the cloud-friendly clients.

Cloud-Friendly Clients

These are my second-favorite clients. They are also the most common group of clients.

They are happy to use cloud-based Software as a Service (SaaS) applications. They accept the idea that their data will live in cloud-based storage. But the data that they need to work with for analytics is not yet in the cloud. They might not even have plans to move it. Often, they just haven't made the move yet.

In this case, I'll only move the data that they need into the cloud.

Starting Point

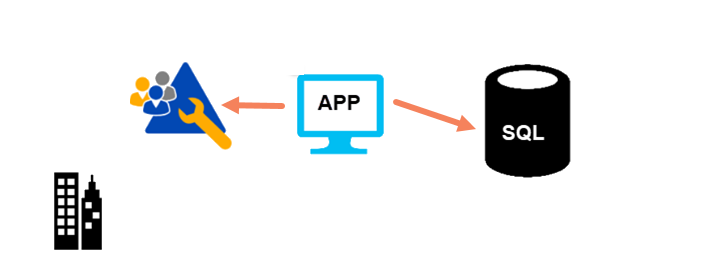

When I start working with these clients, their architecture often looks like shown in figure 3.

Most of these clients have Active Directory (AD) implemented. That could be either on-premises or in a private data center. They run their applications on premises. Their on-premises databases will be the source of data for our analytics work.

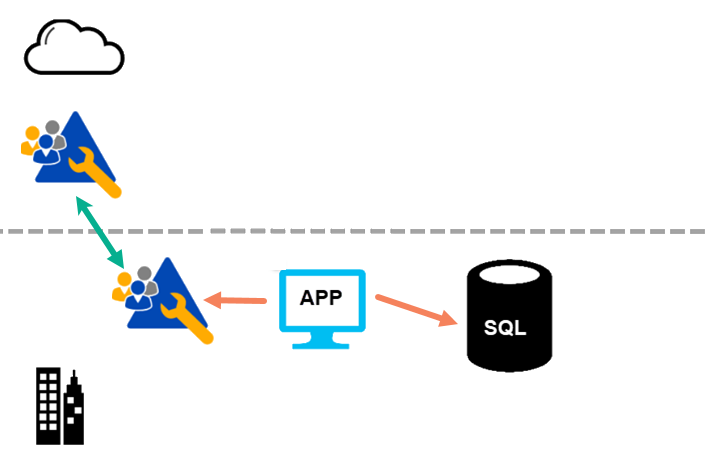

First Step: Establish Hybrid Identity

Many of the clients will already be using one of the Microsoft 365 products. They might be using Microsoft Word or Microsoft Excel. If they are, this is good news as they will already have a Microsoft Entra ID (formerly Azure ID) tenant in place. The exception is where they have used personal licenses for these products. If they have personal licenses, I encourage them to move to organizational licenses.

Because they are going to be using Power BI, they will need a Microsoft Entra ID tenant. If they already have one from Microsoft 365 or other product/service, great. If not, I create one for them.

I must make sure that their current Active Directory syncs with Microsoft Entra ID. The tool for that depends upon how far the client is in their cloud journey.

For many clients, Microsoft Entra Connect is the tool of choice. (It was Azure AD Connect). It allows you to still perform most management on-premises.

Microsoft Entra Cloud Sync is the more modern option. It suits clients that are happy to manage identity from the cloud instead of on-premises.

I recommend you check out the options available in both tools. There are different ways to sync, and you can choose the objects that are synced. You can even monitor the health of the sync setup.

You can choose to sync these ways:

- Sync password hashes. This sends the user's Active Directory password hash to Microsoft Entra ID. It allows Microsoft Entra ID to authenticate the user without knowing the password. It also avoids storing a password in the cloud.

- Pass-through. Users can have the same password on-premises and in the cloud. Microsoft Entra ID sends login details to Active Directory to resolve.

- Federation. Microsoft Entra ID becomes part of an Active Directory Federation Services (ADFS) setup. It is complex to set up and use.

Most of our clients sync password hashes.

Once hybrid identity is in place, the architecture looks like shown in figure 4.

Other SaaS applications (including Microsoft 365) will also be using Microsoft Entra ID as their identity provider.

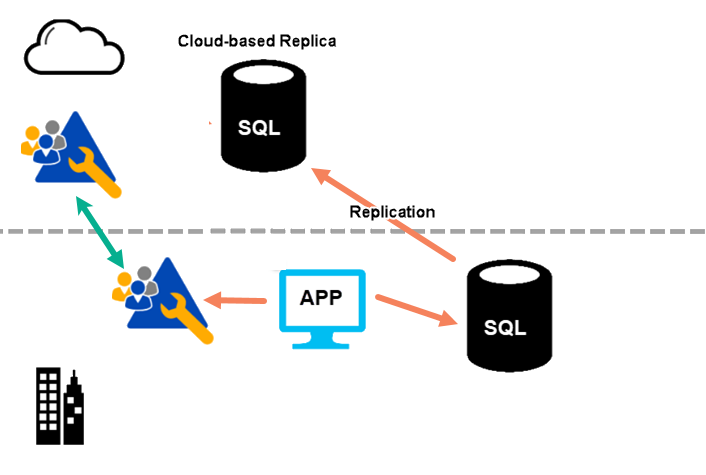

Second Step: Cloud-based Data Replica

The next thing I try to do is to get a copy of the source data into Azure with as low a latency as possible. If the source system is SQL Server, I use two methods:

Option 1: I use transactional replication (TR) to sync data into Azure SQL Database. In recent years, the SQL Server team has given us the ability to create a TR subscriber in an Azure SQL Database. This provides a simple, reliable option and gives me a cloud-based replica of the data I am interested in. It also allows IT personnel to manage the replica from the on-premises end.

Transactional replication suffers from what I call old pain. It was difficult to set up and to manage in the past. And people remember that. The option that I suggest here is very easy to work with.

Note: not all source applications will tolerate having transactional replication in place. If the client has a third-party application, I need to check with the authors of the application to see if this is ok.

Option 2: If I can't use transactional replication, I need another way to copy the data. I will establish an Azure SQL Database as a replica, and then I'll use a tool like Azure Data Factory (ADF) pipelines to copy the data. To access on-premises data, ADF needs an integration runtime (IR) installed.

While the ADF option is reasonable, I'll get lower latency with transactional replication.

In both cases, I will only sync data that is of interest for the analytics, not the whole database.

After the second step is implemented, the architecture looks like figure 5.

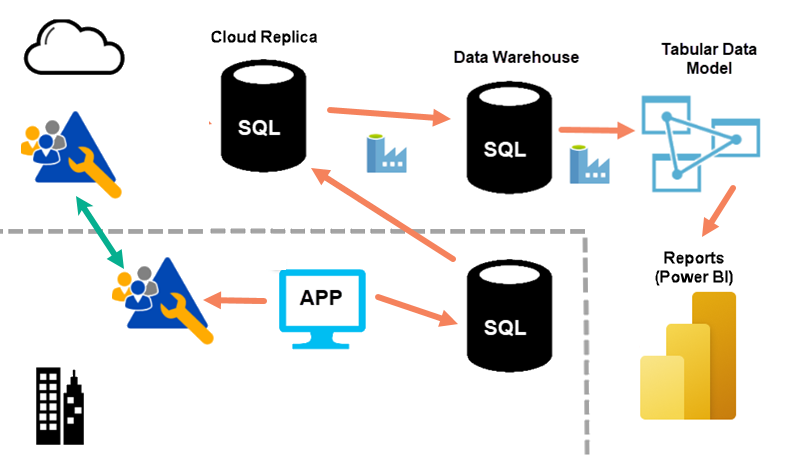

Third Step: Data Warehouse

The next steps are like what I've previously described with cloud-native clients.

The finalized architecture looks like figure 6.

Data Warehouse Structure and Purpose

I create a data warehouse in Azure using Azure SQL Database. While I could potentially use Azure SQL Managed Instance or Azure Synapse Analytics, the costs associated with this are rarely justified for this part of our projects. At the time of writing, I consider a Microsoft Fabric warehouse to not be sufficiently mature enough to use for this purpose. Azure SQL Database is a great fit for this, and it is both scalable and cost effective.

For most clients, I use a single data warehouse. In that warehouse, I will:

- reshape the data.

- cleanse the data.

- version the source data.

- align the data from many source systems if required.

I need to ensure that the data is ready to load into a tabular model. I want to avoid the need for further transformation.

The data warehouse contains areas that represent stages of processing. In data lake systems, I see discussion on medallion architectures. This is similar. I use schemas to separate these areas.

I'll discuss more about how I structure the data warehouse in a later article.

Tabular Data Model

For a tabular data model, I use either Azure Analysis Services or Power BI Premium.

I'm a fan of Azure Analysis Services. I find it has an appropriate blend of capability and cost. The exception to this is if the client already has, or can justify the cost of, Power BI Premium licensing. For us, that means clients who will have more than about 300 users of the final Power BI reports. Power BI Premium licensing is not currently cost effective for smaller clients.

In the future, semantic data models in Microsoft Fabric might provide the tabular data model that I need. At present, most of these clients are not using Microsoft Fabric yet.

Azure Analysis Services (AAS) is also a great point for connecting other tools. This includes Microsoft Excel and SQL Server Reporting Services (SSRS). Some clients are still using SSRS. Those connections can use the same row-level security.

Note: I do not recommend using AAS as the source of data for further transformation or ETL work. For example, some clients use tools like Tableau. I supply the data they need from the dimensional data warehouse, not from AAS.

Scheduled Data Movement

I use Azure Data Factory (ADF) to manage the movement of data from source systems into the data warehouse. I also use ADF to schedule this data movement.

For most clients, I need to schedule the processing of the tabular data model. Scheduling refreshes from within Power BI is not flexible enough. I use ADF to schedule this process.

Note: I do not use DataFlows in ADF to perform transformation. Most clients think that ADF is a low-cost service. Clients with cost concerns are those using DataFlows and/or the SSIS Integration Runtime. I avoid both.

The Azure-based alternatives to ADF are:

- Azure Synapse pipelines

- Microsoft Fabric data pipelines

ADF is currently more capable and flexible than either of these. This might change in the future but currently, I see ADF as a superset of Azure Synapse pipelines, and Azure Synapse pipelines as a superset of Microsoft Fabric data pipelines.

Power BI

Power BI is now the most popular visualization tool for analytics. Most clients that I work with are happy to use it but the architecture that we put in place would allow for the use of other analytic tools. In a later article, I will discuss how we implement visualization using Power BI.

Azure DevOps

It is important to manage development projects, and projects for implementing Power BI in enterprises are no different. If a client already has a tool for source control, versioning, and project management, I'm happy to work with whatever tool they prefer. When I am choosing a tool, I currently prefer to work with Azure DevOps. It provides the capabilities that I need at a very low cost, and integrates well with Microsoft Entra ID.

Summary

For projects targeting cloud-friendly clients, I typically use these tools from the Microsoft stack:

- SQL Server transactional replication for syncing data to the cloud replica

- Microsoft Entra ID (EID) for identity management

- Microsoft Entra Cloud Sync or Microsoft Entra Connect for identity synchronization

- Azure SQL Database (ASD) for creating a data replica and a data warehouse

- Azure Analysis Services (AAD) or Power BI Premium (PBIP) for the tabular data model

- Azure Data Factory (ADF) for moving data and for orchestrating data movement and processing, and in scenarios where transactional replication cannot be used

- Power BI (PBI) for reporting and visualization

- Azure DevOps (AzDO) for project management, source code control and versioning

And optionally:

- Microsoft Excel for ad-hoc queries over the analytic data model

- SQL Server Reporting Services (SSRS) or Power BI (PBI) for paginated reports

In the next article, I’ll describe how I change this for cloud-conservative clients.