Data Security in AI Development: Best Practices with Azure OpenAI

Artificial intelligence (AI) has become a transformative technology, powering applications and systems across various industries. As AI adoption increases, so does the volume and sensitivity of the data being processed. With data being a critical asset in AI development, ensuring its security and privacy is paramount. Both Azure and OpenAI, leaders in the AI space, offer robust solutions and best practices to safeguard data throughout the AI development lifecycle. In this article, I will explore the importance of data security in AI development, the potential risks involved, and the best practices provided by Azure and OpenAI to protect data and maintain trust in AI systems.

Understanding the Importance of Data Security in AI Development

Data is the foundation of AI development, serving as the fuel that powers machine learning (ML) algorithms and trains AI models. Whether it's images, text, audio, or other types of data, it contains valuable information that can be used to make intelligent decisions. However, with this value comes a significant risk: the potential for data breaches and unauthorized access.

Data security in AI development is critical for several reasons:

- Preserving privacy: Many datasets contain sensitive information about individuals, including personal, financial, and health-related data. Protecting this information is essential to maintain user trust and comply with data protection regulations.

- Guarding intellectual property: Companies invest significant resources in creating AI models and algorithms. Protecting their proprietary data and intellectual property is crucial to maintaining a competitive advantage.

- Avoiding bias and discrimination: AI models trained on biased or discriminatory data can perpetuate unfair outcomes. Responsible data handling includes identifying and reducing bias in datasets and following ethical AI practices.

- Mitigating financial and reputational risks: Data breaches can lead to financial losses and damage an organization's reputation. Implementing robust data security measures is essential for risk mitigation.

Actions to Take

To enhance the security and integrity of AI systems:

- Conduct regular risk assessments focusing on data handling in AI systems.

- Train staff on data security best practices and the importance of safeguarding AI data.

- Stay updated with news on AI data breaches to understand evolving threats.

Several potential risks threaten data security in AI development:

- Data breaches: Unauthorized access to sensitive data can lead to data breaches, exposing personal information and sensitive corporate data.

- Adversarial attacks: AI models are susceptible to adversarial attacks, where attackers manipulate input data to produce incorrect or malicious results.

- Model extraction: Competitors or malicious actors may attempt to extract the details of proprietary AI models by analyzing their responses to queries.

- Model inversion attacks: Attackers may reconstruct sensitive information about individuals in the training data from AI model outputs, even though the model never exposes that data directly.

- Unintended data exposure: Developers may inadvertently expose sensitive data during AI model training, evaluation, or deployment.

Actions to Take

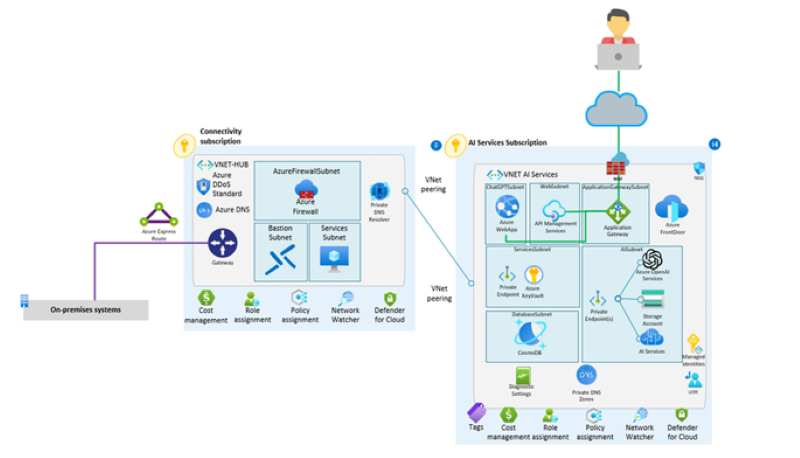

Enhance your Azure security posture with these key actions:

- Use Azure’s encryption features for both data at rest and in transit.

- Set up Azure Firewall and configure Azure Virtual Network for enhanced network security.

- Implement Microsoft Entra ID (formerly Azure Active Directory) and role-based access control (RBAC) to manage access controls diligently.

Best Practices for Data Security in AI Development

Use the best practices in this section to address potential risks and to help ensure data security throughout the AI development lifecycle.

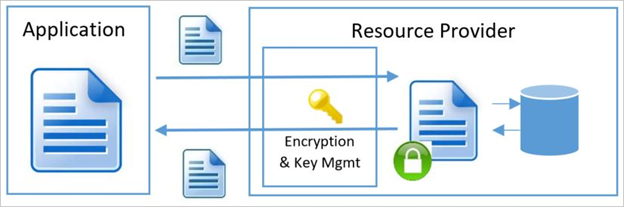

Implement Robust Data Encryption and Strict Access Controls

Implement strong encryption to protect data both at rest and in transit. Use well-established algorithms such as AES-256 for data storage and databases, and TLS for communication channels. Additionally, enforce strict access controls so that only authorized personnel can access sensitive data.
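To make the idea of strict access controls concrete, here is a minimal deny-by-default role check in Python. The role names, permission strings, and the `is_allowed` helper are illustrative assumptions, not Azure's built-in role definitions; in Azure, the equivalent mapping would normally live in role assignments rather than in application code.

```python
from enum import Enum


class Permission(Enum):
    READ_DATASET = "dataset:read"
    WRITE_DATASET = "dataset:write"
    DEPLOY_MODEL = "model:deploy"


# Hypothetical role definitions, for illustration only.
ROLE_PERMISSIONS = {
    "data_scientist": {Permission.READ_DATASET},
    "data_engineer": {Permission.READ_DATASET, Permission.WRITE_DATASET},
    "ml_ops": {Permission.DEPLOY_MODEL},
}


def is_allowed(roles, permission):
    """Return True only if at least one role explicitly grants the permission.

    Unknown roles grant nothing, so access is denied by default.
    """
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

The key design choice is that nothing is granted implicitly: a user with no roles, or with a role the table does not know, is refused.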

Use Secure Data Storage Solutions and Encrypt Data During Transmission

Choose secure storage solutions for data, ensuring that data repositories and databases are protected from unauthorized access. Encrypt data during transmission using secure protocols like HTTPS to prevent eavesdropping and data interception.
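As a rough sketch of encrypting data in transit, the Python standard library can build a TLS context that verifies certificates and refuses old protocol versions. The helper names `make_tls_context` and `fetch_over_https` are assumptions made for this example, and the TLS 1.2 floor is one reasonable choice rather than a requirement stated by Azure.

```python
import ssl
import urllib.request


def make_tls_context():
    """Build a client-side TLS context that verifies certificates and hostnames."""
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def fetch_over_https(url, timeout=10.0):
    """GET a URL, rejecting anything that is not HTTPS."""
    if not url.lower().startswith("https://"):
        raise ValueError("refusing to send data over a non-HTTPS URL")
    with urllib.request.urlopen(url, context=make_tls_context(), timeout=timeout) as resp:
        return resp.read()
```

Rejecting plain `http://` URLs before any connection is opened prevents a misconfigured endpoint from silently downgrading to an unencrypted channel.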

- Data anonymization and privacy-preserving techniques: Anonymize or pseudonymize data whenever possible, especially when sharing datasets for collaborative AI development. Adopt privacy-preserving techniques like differential privacy to minimize the risk of identifying individuals from the data.

- Regular data audits and monitoring: Conduct regular audits of data access and usage to identify any unauthorized activities or potential security breaches. Implement robust data monitoring solutions to detect anomalies and unusual patterns in data access and usage.

- Secure AI model deployment and access: Securely deploy AI models in production environments, employing RBAC to restrict access to authorized users only. Regularly update and patch AI model deployment environments to address security vulnerabilities.
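The pseudonymization and differential privacy techniques mentioned above can be sketched with the Python standard library. This is a simplified illustration under stated assumptions: `pseudonymize` and `noisy_count` are hypothetical helper names, the secret key would in practice come from a managed secret store, and a production system should use a vetted differential privacy library rather than `random`.

```python
import hashlib
import hmac
import random


def pseudonymize(identifier, secret_key):
    """Replace an identifier with a keyed HMAC-SHA256 digest.

    The same identifier and key always give the same token, so records can
    still be joined, but the mapping cannot be recomputed without the key.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def noisy_count(true_count, epsilon, rng):
    """Add Laplace noise with scale 1/epsilon to a counting query.

    A count changes by at most 1 when one person is added or removed
    (sensitivity 1), which is why the scale is 1/epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # The difference of two i.i.d. exponentials with rate epsilon
    # follows a Laplace distribution with mean 0 and scale 1/epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise
```

For example, `noisy_count(100, 1.0, random.Random())` returns a value near 100 whose exact offset varies per call; smaller values of `epsilon` add more noise and give stronger privacy.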

Actions to Take

- Review and adhere to OpenAI’s security protocols when integrating their AI models.

- Ensure ethical use of AI by aligning with OpenAI's ethical guidelines and frameworks.

- Regularly update and review access to OpenAI services in your applications.

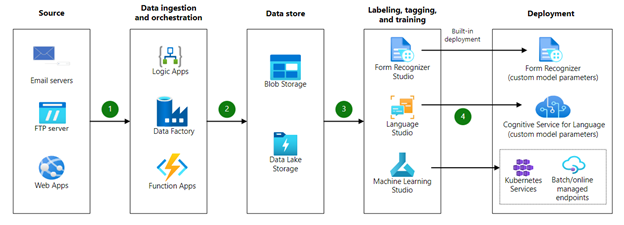

Data Security in Azure AI Services

Microsoft Azure provides a wide range of AI services with built-in data security features. Here are some key Azure services and features that enhance data security in AI development:

- Azure confidential computing: Azure confidential computing allows you to protect sensitive data while it's being processed. It uses trusted execution environments (TEEs) to ensure that data remains encrypted and protected even while in use by the AI model.

- Azure Key Vault: Azure Key Vault enables secure storage and management of cryptographic keys and secrets used for data encryption and access control. It ensures that keys are well protected and not directly accessible, reducing the risk of unauthorized access.

- Microsoft Entra ID: Microsoft Entra ID provides identity and access management services, enabling you to control access to Azure resources and AI services based on user identities and roles.

- Microsoft Defender for Cloud (formerly Azure Security Center): Microsoft Defender for Cloud offers continuous monitoring and threat detection for Azure resources, including AI services. It provides insights into potential security vulnerabilities and recommends actions to improve security posture.
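As an illustration of keeping secrets out of source code, the sketch below reads a secret from Azure Key Vault with the Azure SDK for Python. It assumes the `azure-identity` and `azure-keyvault-secrets` packages are installed and that the running identity has permission to read secrets; the `vault_url` and `fetch_secret` helpers, and the vault and secret names in the usage note, are hypothetical.

```python
def vault_url(vault_name):
    """Build the public-cloud URL for a Key Vault from its name."""
    return f"https://{vault_name}.vault.azure.net"


def fetch_secret(vault_name, secret_name):
    """Read one secret value from Azure Key Vault.

    Requires the azure-identity and azure-keyvault-secrets packages and an
    identity that has been granted permission to read secrets.
    """
    # Imported lazily so vault_url() works without the Azure SDK installed.
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.secrets import SecretClient

    client = SecretClient(
        vault_url=vault_url(vault_name),
        credential=DefaultAzureCredential(),
    )
    return client.get_secret(secret_name).value
```

A call such as `fetch_secret("contoso-ai", "openai-api-key")` would then return the secret at run time, so the key never needs to appear in code or configuration files.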

Actions to Take

Enhance your organization's data security and compliance with these key actions:

- Implement strict RBAC policies.

- Schedule and conduct regular security audits and compliance checks.

- Encrypt all sensitive data, prioritizing end-to-end encryption methods.

- Deploy AI-driven anomaly detection systems to monitor data usage and access patterns.
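Monitoring access patterns does not have to start with a full machine learning system. As a simple statistical baseline (not the AI-driven detection itself), the sketch below flags users whose access count is far from the median. The function name, the input shape (a mapping from user to access count), and the 3.5 threshold are assumptions chosen for illustration.

```python
from statistics import median


def flag_unusual_access(access_counts, threshold=3.5):
    """Return users whose access count is an outlier by modified z-score.

    The modified z-score uses the median and the median absolute deviation
    (MAD), so a single extreme value does not hide itself by inflating the
    spread the way it would with a mean and standard deviation.
    """
    if not access_counts:
        return []
    counts = list(access_counts.values())
    center = median(counts)
    mad = median(abs(c - center) for c in counts)
    if mad == 0:
        # At least half of the users share the same count; flag anyone who differs.
        return sorted(u for u, c in access_counts.items() if c != center)
    return sorted(
        user
        for user, count in access_counts.items()
        if 0.6745 * abs(count - center) / mad > threshold
    )
```

With daily counts of `{"alice": 12, "bob": 9, "carol": 11, "dave": 10, "eve": 240}`, only `eve` is flagged, which is the kind of signal that should trigger a closer review.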

Data Security with Azure OpenAI

OpenAI also emphasizes data security and privacy in AI development. As an AI developer working with OpenAI models through Azure OpenAI, take steps to safeguard the data you send to the service.

Your prompts (inputs), completions (outputs), your embeddings (a special format of data representation that ML models and algorithms can use), and your training data:

- Are NOT available to other customers.

- Are NOT available to OpenAI.

- Are NOT used to improve OpenAI models.

- Are NOT used to improve any Microsoft or third-party products or services.

- Are NOT used for automatically improving Azure OpenAI models for your use in your resource. (The models are stateless, unless you explicitly fine-tune models with your training data.)

Your fine-tuned Azure OpenAI models are available exclusively for your use.

Azure OpenAI Service is fully controlled by Microsoft; Microsoft hosts the OpenAI models in the Microsoft Azure environment, and the service does NOT interact with any services operated by OpenAI.

Implement API Security

- Secure API endpoints for interacting with OpenAI models.

- Use HTTPS for encrypted communication.
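One way to apply both points is to load credentials from the environment and refuse any endpoint that is not HTTPS before a request is built. This is a minimal sketch: the environment variable names `AZURE_OPENAI_ENDPOINT` and `AZURE_OPENAI_API_KEY` and the helper functions are assumptions for this example, while the `api-key` header is what key-based authentication against the Azure OpenAI REST API uses.

```python
import os
from urllib.parse import urlparse


def load_azure_openai_settings(env=None):
    """Read endpoint and key from the environment and validate the endpoint."""
    env = os.environ if env is None else env
    endpoint = env.get("AZURE_OPENAI_ENDPOINT", "")
    api_key = env.get("AZURE_OPENAI_API_KEY", "")
    if not api_key:
        raise ValueError("AZURE_OPENAI_API_KEY is not set")
    parsed = urlparse(endpoint)
    if parsed.scheme != "https" or not parsed.hostname:
        raise ValueError("endpoint must be an https:// URL")
    return endpoint.rstrip("/"), api_key


def build_headers(api_key):
    """Headers for key-based authentication against the Azure OpenAI REST API."""
    return {"api-key": api_key, "Content-Type": "application/json"}
```

Keeping the key out of source code means it can be rotated without a code change, and failing fast on a non-HTTPS endpoint avoids sending prompts or keys in clear text.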

Adhere to Standard Data Handling Policies

- Adhere to ethical guidelines and data privacy standards.

- Ensure that data used in OpenAI models complies with data protection regulations.

Ensure Model Security

- Update AI models regularly to address security vulnerabilities.

- Ensure that models are robust against adversarial attacks.

Actions to Take

- Set up a secure Azure environment, ensuring that all configurations align with security best practices.

- Integrate OpenAI models carefully, monitoring data flow and access.

- Establish a routine for continuous monitoring and updating of security measures in your AI applications.

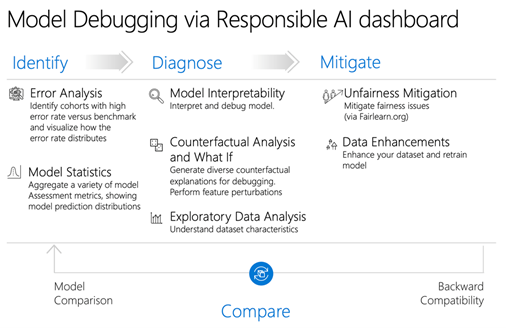

Responsible AI Practices for Azure OpenAI Models

To responsibly deploy Azure OpenAI models, follow these best practices:

- Identify potential harm: Identify and prioritize potential harms through red-teaming, stress-testing, and analysis, focusing on specific models and application scenarios.

- Diagnose: Develop systematic measurement methods, both manual and automated, to gauge the frequency and severity of identified harms.

- Mitigate harm: Implement layered mitigations at model, safety system, application, and positioning levels, and continually measure their effectiveness.

These practices align with the Microsoft Responsible AI Standard and are informed by the National Institute of Standards and Technology (NIST) AI Risk Management Framework.

Data Security Compliance and Regulations

In addition to implementing best practices, AI developers must comply with data security regulations and standards that govern the use and handling of sensitive data. Here are some key data security compliance considerations for AI development.

GDPR and Data Protection

The General Data Protection Regulation (GDPR) is a comprehensive data protection regulation that applies to organizations handling the personal data of individuals in the European Union (EU), regardless of citizenship. GDPR requires organizations to protect personal data, establish a lawful basis for processing it (consent is one such basis), and ensure data subjects have the right to access, rectify, and erase their data.

When working with AI and personal data, AI developers must ensure compliance with GDPR requirements. This includes implementing data protection measures, establishing a lawful basis such as consent for data processing, and adhering to the principle of data minimization to limit the collection of personal data.
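Data minimization and the right to erasure can both be expressed as small, testable operations on a dataset. The sketch below is illustrative only: the helper names, the `user_id` field, and the in-memory list of records are assumptions, and a real system would also need to cover backups, logs, and derived datasets.

```python
def minimize(record, allowed_fields):
    """Keep only the fields that the stated processing purpose needs."""
    return {key: value for key, value in record.items() if key in allowed_fields}


def erase_subject(records, subject_id, id_field="user_id"):
    """Return the records that remain after removing one data subject's rows."""
    return [record for record in records if record.get(id_field) != subject_id]
```

Applying `minimize` at ingestion time, with an explicit allow-list per purpose, keeps fields such as email addresses out of training data unless there is a documented reason to include them.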

HIPAA and Healthcare Data

For AI applications in the healthcare industry, compliance with the Health Insurance Portability and Accountability Act (HIPAA) is crucial. HIPAA sets standards for the security and privacy of protected health information (PHI). AI developers working with healthcare data must ensure that PHI is encrypted, access to PHI is restricted to authorized personnel only, and proper audit trails are maintained for data access.
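One possible way to make an audit trail tamper-evident is to chain entries with hashes, so that changing an earlier entry breaks every later link. This is a sketch of that general technique, not a mechanism that HIPAA prescribes; the field names and the `append_entry` and `verify_chain` helpers are assumptions for illustration.

```python
import hashlib
import json


def _digest(body):
    """Hash an entry body deterministically (keys sorted before encoding)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, user, action, resource, timestamp):
    """Append an audit entry whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {
        "user": user,
        "action": action,
        "resource": resource,
        "timestamp": timestamp,
        "prev_hash": prev_hash,
    }
    log.append({**body, "hash": _digest(body)})


def verify_chain(log):
    """Return True if every entry's hash and back-link are intact."""
    prev_hash = "0" * 64
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

Note that a chain like this detects edits and removals in the middle of the log but not truncation of the most recent entries, so the latest hash should also be stored somewhere the log writer cannot modify.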

Other Industry-Specific Regulations

Various industries have specific data security regulations that AI developers must comply with. For example, financial institutions may need to adhere to the Payment Card Industry Data Security Standard (PCI DSS) for the protection of credit card data. Similarly, organizations in the education sector may need to comply with the Family Educational Rights and Privacy Act (FERPA) to protect student data.

It is essential for AI developers to be aware of the industry-specific regulations relevant to their applications and implement the necessary data security measures accordingly.

Conclusion

Data security is a critical aspect of AI development, and it plays a vital role in building trust in AI systems. Azure and OpenAI provide robust solutions and best practices to ensure the security and privacy of data throughout the AI development lifecycle.

By following best practices such as data encryption, access controls, data anonymization, and regular data audits, AI developers can protect sensitive data from unauthorized access and mitigate potential risks. Leveraging Azure's security services, such as Azure confidential computing, Azure Key Vault, and Microsoft Defender for Cloud (formerly Azure Security Center), provides an additional layer of security for AI applications.

Moreover, data security compliance with regulations such as GDPR and HIPAA is essential for AI developers, especially when dealing with personal and sensitive data. Adhering to industry-specific regulations ensures that AI systems meet the highest standards of data protection in their respective sectors.

As AI continues to advance and become more integrated into various aspects of our lives, data security will remain a top priority. AI developers must stay vigilant and proactive in implementing data security measures to protect data integrity, confidentiality, and privacy. By doing so, they can build AI systems that are not only powerful and effective but also trustworthy and respectful of individuals' data rights. With a strong focus on data security and compliance, AI has the potential to drive positive transformations across industries while maintaining the highest ethical and privacy standards.

Final Recommendations

- Regularly review and update your AI data security measures.

- Stay informed about the latest developments in AI and data security.

- Engage with the community to share knowledge and learn from best practices.

References

Data, privacy, and security for Azure OpenAI Service