Azure OpenAI in Action

Artificial intelligence has been around for a long time; some sources trace the first references to the idea back to 1748. Now, with ChatGPT and a steady stream of new solutions, AI is on everyone's lips, and it is easier than ever to access and apply.

Microsoft has fully embraced this trend. It makes it easy for companies to apply AI without training their own models, and it integrates AI into its own products through a growing number of copilots. These copilots are set to fundamentally change how we interact with programs, operating systems, and the internet, as well as how we produce content. The most prominent example is Microsoft 365 Copilot, which aims to increase productivity through deep integration into the Microsoft 365 services.

However, for those who want to build their own copilots or solutions based on OpenAI's LLMs, Microsoft offers the Azure OpenAI Services: LLMs as a service that your applications can integrate with. Unlike the services offered directly by OpenAI, they run within Azure and come with Microsoft's enterprise data-privacy commitments and SLAs.

Deploy Azure OpenAI Services

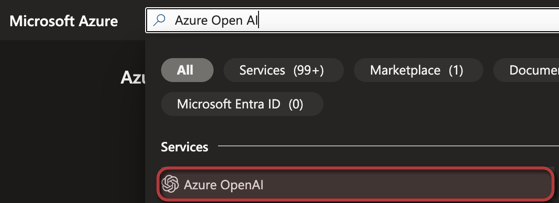

Before delving into practical use cases, it's worth understanding how the Azure OpenAI Services are deployed. First, you must complete an opt-in form at https://aka.ms/oai/access, which enables your subscription for deploying the service. After you submit the form, expect a confirmation within a few hours or days indicating that your subscription is ready. Once you have it, log in to the Azure Portal at portal.azure.com and search for “Azure OpenAI” (figure 1) to start the setup.

The next step in the deployment process is to click +Create (figure 2) to create a new resource.

In the resource creation wizard (figure 3), select the subscription that you enabled for Azure OpenAI Services, the resource group you want to deploy the resource into, and the region. Model availability differs between regions, so choose a region that offers the models you need. Then enter a name for your resource and select “Standard S0” as the pricing tier. Click Next and follow the remaining steps to complete the deployment.
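The resource name you enter also determines the endpoint your applications will call later. The following Python sketch assumes the default naming, where a resource called `contoso-openai` (a hypothetical name) is reachable at `https://contoso-openai.openai.azure.com/`. Check the resource's Keys and Endpoint page in the portal for the actual value.

```python
def azure_openai_endpoint(resource_name: str) -> str:
    """Return the default endpoint URL for an Azure OpenAI resource.

    Assumes the default custom subdomain: <resource-name>.openai.azure.com.
    """
    name = resource_name.strip().lower()  # DNS host names are case-insensitive
    if not name:
        raise ValueError("resource name must not be empty")
    return f"https://{name}.openai.azure.com/"


print(azure_openai_endpoint("contoso-openai"))
# https://contoso-openai.openai.azure.com/
```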

Using the Azure OpenAI Services

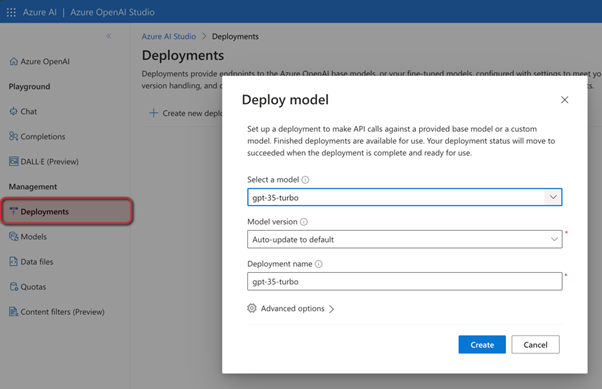

With the service deployed, the next step is to deploy a model. Open Azure OpenAI Studio at https://oai.azure.com/, go to the “Deployments” menu, and click + Create new deployment. Select the model you want to deploy, enter a name for the deployment, and click Create.
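Applications address a model through its deployment name, not the model name. The following minimal sketch uses only the Python standard library. It builds the chat completions URL for a deployment and sends a request with key-based authentication through the `api-key` header. The resource and deployment names are hypothetical, and the `api-version` value is only an example. Check the Azure OpenAI REST API reference for the versions currently supported.

```python
import json
import urllib.request
from urllib.parse import quote, urlencode

# Assumption: "2024-02-01" is an example GA api-version; check the
# Azure OpenAI REST API reference for the versions supported today.
API_VERSION = "2024-02-01"


def chat_completions_url(endpoint: str, deployment: str,
                         api_version: str = API_VERSION) -> str:
    """Build the chat completions URL for one model deployment."""
    base = endpoint.rstrip("/")
    # The deployment name (not the model name) goes into the path.
    path = f"/openai/deployments/{quote(deployment, safe='')}/chat/completions"
    query = urlencode({"api-version": api_version})
    return f"{base}{path}?{query}"


def chat(url: str, api_key: str, messages: list[dict]) -> str:
    """POST a chat request using key-based auth and return the reply text."""
    body = json.dumps({"messages": messages}).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        result = json.load(response)
    return result["choices"][0]["message"]["content"]


# "contoso-openai" and "my-gpt-deployment" are hypothetical names.
url = chat_completions_url("https://contoso-openai.openai.azure.com/",
                           "my-gpt-deployment")
print(url)
# https://contoso-openai.openai.azure.com/openai/deployments/my-gpt-deployment/chat/completions?api-version=2024-02-01
```

Calling `chat(url, key, [{"role": "user", "content": "Hello"}])` requires a real key and network access, so only the URL construction runs above. The `AzureOpenAI` client in the `openai` Python package is an alternative to hand-written HTTP calls.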

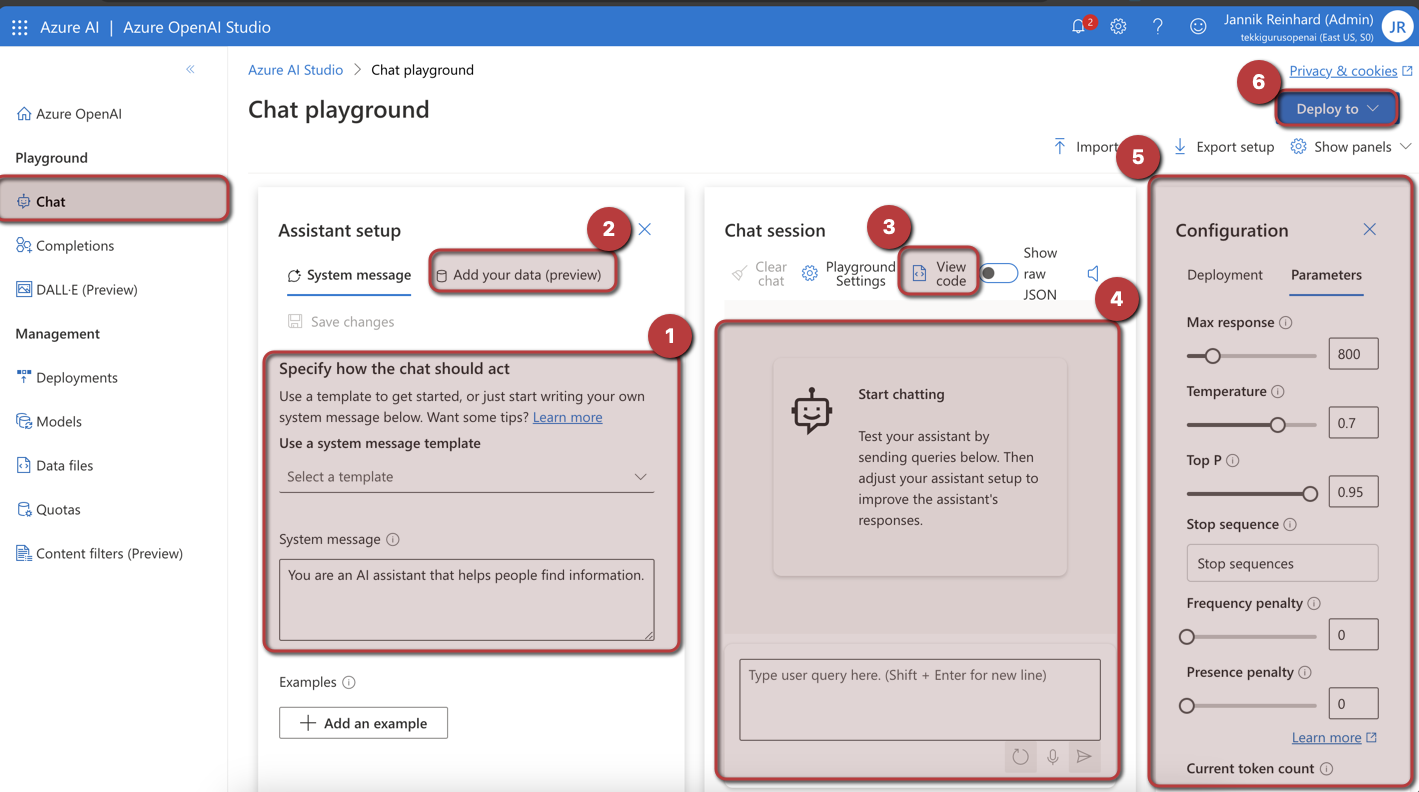

In Azure OpenAI Studio you can test your prompts (see the Chat playground in figure 5), experiment with different parameters, and link your custom data to the model. The playground offers the following features:

- System Message Settings: This section allows you to set a system message that guides the model towards a specific tone or persona. You can also provide instructions on how the model should respond.

- Custom Data Integration: Here you can link your own data so the model can answer questions about it. For instance, you can store documents in Azure Blob Storage and index them with Azure AI Search (formerly Azure Cognitive Search).

- Code Generation: This feature generates code in various programming languages based on your current configuration. You can copy this code directly into your own application.

- Interactive Playground: Similar to ChatGPT, this is an interactive space where you can input a message and receive a response from the model.

- Parameter Adjustments: The Parameters section of the Configuration area lets you adjust the model's inference parameters, such as the temperature, which controls how random (or “creative”) the responses are.

- Easy Chatbot Deployment: With the Deploy to button, you can deploy your custom chatbot, including your data and configuration, as a web app on Azure App Service.
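The playground settings map onto the request body of the chat completions API. The sketch below is a hypothetical helper, not the code the playground generates. It puts the system message, the user's message, and the temperature into a request body, and it can optionally attach an Azure AI Search index as a data source. The `data_sources` shape is assumed to follow the “On your data” schema of API version 2024-02-01. Compare it with the output of the playground's code generation feature before relying on it.

```python
import json
from typing import Optional


def build_chat_body(system_message: str, user_message: str,
                    temperature: float = 0.7, max_tokens: int = 800,
                    search: Optional[dict] = None) -> dict:
    """Mirror the playground settings as a chat completions request body."""
    if not 0.0 <= temperature <= 2.0:
        # The API accepts temperatures from 0 to 2; lower is more deterministic.
        raise ValueError("temperature must be between 0 and 2")
    body = {
        "messages": [
            {"role": "system", "content": system_message},  # system message setting
            {"role": "user", "content": user_message},      # what you type in the chat
        ],
        "temperature": temperature,
        "max_tokens": max_tokens,  # example value, not a recommended default
    }
    if search is not None:
        # Assumption: "On your data" schema of api-version 2024-02-01,
        # grounding answers in an Azure AI Search index with key-based auth.
        body["data_sources"] = [{
            "type": "azure_search",
            "parameters": {
                "endpoint": search["endpoint"],
                "index_name": search["index_name"],
                "authentication": {"type": "api_key", "key": search["key"]},
            },
        }]
    return body


body = build_chat_body(
    "You are a friendly support assistant for Contoso. Answer briefly.",
    "How do I reset my password?",
    temperature=0.2,
)
print(json.dumps(body, indent=2))
```

A low temperature such as 0.2 suits support scenarios where consistent answers matter more than variety.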

Putting Azure OpenAI Services into Action

With all prerequisites in place, you can start using your Azure OpenAI resource. Amid the hype surrounding large language models (LLMs), the critical task is to identify real, tangible use cases and to explore how these capabilities can bring significant benefits to your company.

When identifying use cases for LLMs in your company, it's advisable to begin with a small pilot project. Often, using the playground feature to test the LLM's performance with typical prompts is a good start. If your use case requires specific knowledge, consider grounding the prompts with custom data. If the initial tests show promising results, the next step is to scale up by developing a small proof of concept (POC) implementation. This phase allows for further testing and refinement of the implementation.

The real challenge lies in moving from a successful POC to a fully operational production environment. To achieve this, establish continuous improvement and validation processes. These help ensure that the solution remains effective and accurate over time as new data arrives and use cases evolve.
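A validation process does not have to start big. The following sketch is a deliberately simple, keyword-based regression check: a fixed set of test prompts is run against the model, and each answer must contain certain expected terms. `EvalCase`, `evaluate`, and `stub_model` are hypothetical names. In a real setup, `stub_model` would be replaced by a call to your Azure OpenAI deployment, and keyword matching would likely be complemented by human review or more robust evaluation methods.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    must_contain: list[str]  # terms the answer is expected to include


def evaluate(model: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Run every case through the model and return the share that passed."""
    if not cases:
        return 0.0
    passed = 0
    for case in cases:
        answer = model(case.prompt).lower()
        if all(term.lower() in answer for term in case.must_contain):
            passed += 1
        else:
            print(f"FAIL: {case.prompt!r} -> {answer!r}")
    return passed / len(cases)


def stub_model(prompt: str) -> str:
    # Hypothetical stand-in for a call to your Azure OpenAI deployment.
    canned = {"What is our refund window?": "Refunds are accepted within 30 days."}
    return canned.get(prompt, "I don't know.")


cases = [
    EvalCase("What is our refund window?", ["30 days"]),
    EvalCase("Which regions do we ship to?", ["EU"]),
]
print(f"pass rate: {evaluate(stub_model, cases):.0%}")  # pass rate: 50%
```

Running the same cases after every prompt, data, or model change makes regressions visible before they reach production.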

Example Use Case Ideas

- Customer Service Enhancement: Implement AI-powered chatbots using LLMs to provide instant, 24/7 customer support. These bots can handle a wide range of queries, offering quick resolutions and improving overall customer satisfaction. Combined with automations and your company data, this can be a very beneficial, time-saving use case.

- Content Creation: Use LLMs to generate written content, such as reports, articles, or social media posts, or to write software code. For pictures, Azure OpenAI also offers image-generation models such as DALL-E.

- Data Analysis and Insights: Use LLMs to help analyze datasets and extract key insights and trends. This can be particularly useful in market research, financial analysis, or customer feedback evaluation, and it reduces the data science knowledge required.

- Language Translation: If you offer services in multiple languages and traditional machine translation has not been good enough, LLMs may be able to take over much of your manual translation work. Because they take the surrounding context into account, they often produce much better translations.

- Programming and Code Review: Use LLMs to assist in software development by generating code snippets, reviewing code for errors and vulnerabilities, and suggesting optimizations, thereby improving development efficiency and reducing time-to-market.

- Large Documentation Review and Summarization: Summarize large documents, such as legal and compliance documents, to help reviewers check accuracy and adherence to regulations without spending the time and resources to read through the whole text.

- Predictive Maintenance in Manufacturing: Use LLMs to analyze machinery data, detect issues early, and remediate them automatically.
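For the document summarization use case, long texts often exceed what fits into a single request. A common workaround is map-reduce summarization: split the text into overlapping chunks, summarize each chunk, and then summarize the partial summaries. The sketch below treats character counts as a rough proxy for the model's token limit, which is an assumption. `summarize` is a placeholder for your own function that calls the deployment.

```python
from typing import Callable


def chunk_text(text: str, max_chars: int = 8000, overlap: int = 200) -> list[str]:
    """Split text into chunks of at most max_chars, overlapping by overlap chars."""
    if max_chars <= 0 or not 0 <= overlap < max_chars:
        raise ValueError("need max_chars > 0 and 0 <= overlap < max_chars")
    chunks: list[str] = []
    start = 0
    while start < len(text):
        end = min(start + max_chars, len(text))
        chunks.append(text[start:end])
        if end == len(text):
            break
        # Step back by `overlap` so text cut at a boundary appears in both chunks.
        start = end - overlap
    return chunks


def summarize_long(text: str, summarize: Callable[[str], str],
                   max_chars: int = 8000, overlap: int = 200) -> str:
    """Map step: summarize each chunk. Reduce step: summarize the summaries."""
    partials = [summarize(chunk) for chunk in chunk_text(text, max_chars, overlap)]
    if not partials:
        return ""
    if len(partials) == 1:
        return partials[0]
    # Assumes the joined partial summaries fit into one request; very long
    # documents may need this step applied recursively.
    return summarize("\n\n".join(partials))
```

For example, `summarize_long(contract_text, my_summarize)` would work if `my_summarize` sends a prompt such as “Summarize the following section:” plus the chunk to your deployment. Both names are hypothetical.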

These are only a few examples to inspire you; there are many more use cases you can implement by leveraging the full power of LLMs such as OpenAI's models.